From June 17 to 20, the International Conference on Mathematical Neuroscience (ICMNS) held its 10th anniversary edition in Barcelona, drawing more than 150 participants from over 25 countries. Hosted at the Parc de Recerca Biomèdica de Barcelona (PRBB), this year’s conference confirmed its place as a major international gathering for those working at the intersection of mathematics, neuroscience, and theory-driven biology.

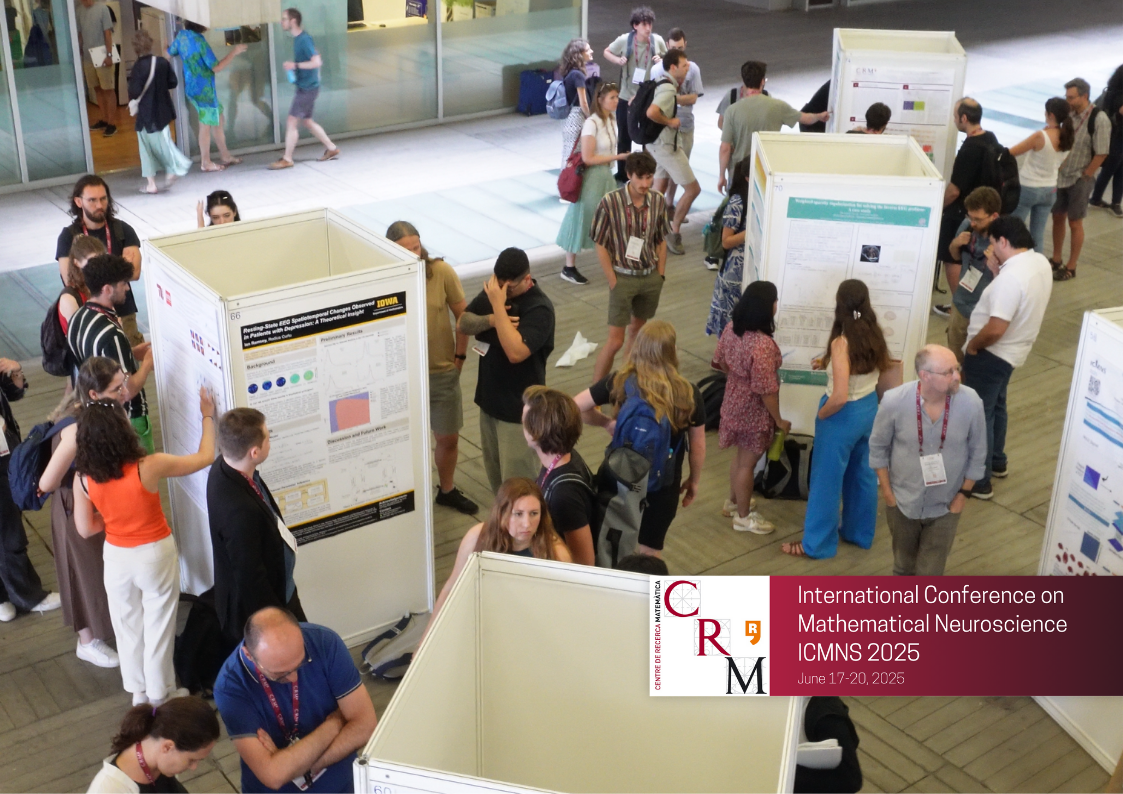

Organised by the Centre de Recerca Matemàtica (CRM), the 2025 edition featured a scientific programme that included 44 contributed oral presentations and more than 80 posters, alongside keynote lectures, invited talks, and many informal exchanges. The talks and posters explored topics such as neural coding, network dynamics, plasticity and learning, cognitive representations, field models, and the biophysics of information processing. With parallel sessions held in the PRBB Auditorium and the Marie Curie Room, the conference maintained a steady rhythm of discussion and cross-disciplinary exchange.

Each morning began with a keynote lecture that set the tone for the day. Elad Schneidman, from the Weizmann Institute, opened the conference by presenting a new class of models for decoding neural population activity. These models are based on sparse, nonlinear projections that require surprisingly small amounts of training data. They scale efficiently to populations of hundreds of neurons and are compatible with biologically plausible neural circuits. Schneidman also introduced a simple noise-driven learning rule and showed how homeostatic synaptic scaling enhances both efficiency and accuracy. The models not only represent a computational advance but also suggest a mechanism by which the brain might perform Bayesian inference and learn structure in high-dimensional neural code spaces.

On Wednesday, Tatyana Sharpee, from the Salk Institute, introduced a geometric perspective on learning in the brain. Her talk showed that neural responses in the hippocampus are organised according to a low-dimensional hyperbolic geometry, and that this geometry expands logarithmically as animals explore their environment. This expansion aligns with the theoretical maximum rate of information acquisition, indicating that neural representations continue to function optimally as they adapt with experience. Sharpee also proposed that similar geometric and entropic principles may apply to other biological systems, such as viral evolution and cell differentiation.

Thursday’s keynote was given by Tatjana Tchumatchenko, from the University of Bonn, who explored how neurons solve the complex problem of distributing thousands of protein species across extensive dendritic trees. Using a reaction-diffusion model, her team showed that this distribution can be explained by an energy minimisation principle. Their predictions, based on computational simulations, aligned with large-scale experimental data on neuronal proteomes and translatomes. This suggests that neurons may favour energetically efficient strategies to maintain synaptic balance and support plasticity across their full morphology.

In addition to the keynotes, the invited speaker sessions brought technical depth and thematic diversity. Rafal Bogacz, from the University of Oxford, presented a predictive coding framework that respects biological constraints on synaptic plasticity. Áine Byrne, from University College Dublin, examined how the choice of neuron model influences the dynamics of gap-junction-coupled systems. Alexis Dubreuil, from the University of Bordeaux, explored how subpopulation structure in neural networks supports flexible computation. Stephanie Jones, from Brown University, introduced the Human Neocortical Neurosolver (HNN), a modelling tool designed to interpret EEG and MEG signals by linking them to circuit-level phenomena. The other invited speakers were Soledad Gonzalo Cogno, Anna Levina, Sukbin Lim, and Jonathan Touboul, each bringing distinct methodologies and perspectives to the ongoing dialogue between theory and neuroscience.

A Thriving Computational Neuroscience Ecosystem in Barcelona

The conference showcased a growing and increasingly diverse field. “The variety of approaches has expanded enormously,” noted Ernest Montbrió (Universitat Pompeu Fabra), one of the organisers of the event, “and we’re seeing more interest every year from mathematicians, physicists, and engineers working on these problems. This diversity of perspectives truly enriches the programme.”

For Gemma Huguet (Universitat Politècnica de Catalunya-CRM), one of the local organisers, the strength of the Barcelona community played a central role. “Hosting this conference is a recognition of the vibrant neuro-computational scene we’ve built here. There’s a strong tradition of collaboration and interaction, and being able to welcome the ICMNS is also a way of giving visibility to the work we do.”

Her colleague Toni Guillamon (Universitat Politècnica de Catalunya-CRM) highlighted the evolution of the field itself: “Twenty years ago, computational neuroscience was focused on single cells or small networks. Today, with the explosion of data and new tools, the mathematical models have to be more sophisticated. We’re seeing more topology, more graph theory, and more mathematical depth, even if sometimes it’s hidden beneath the surface of the applications.”

The conference also placed a special emphasis on young researchers. As Alex Roxin (CRM) put it, “ICMNS offers a balanced environment. It’s not too small, not overwhelming. It’s the kind of setting where early-career scientists can step out of their comfort zone just enough to grow, present their work, get feedback, and connect with the wider community.”

ICMNS 2025 marked a decade of growth for a field that is still expanding. As mathematics continues to reveal the hidden structures of brain function, and as neuroscience raises new questions for mathematical modelling, the future of this interdisciplinary partnership looks more promising than ever.

CRM Comm | Pau Varela
