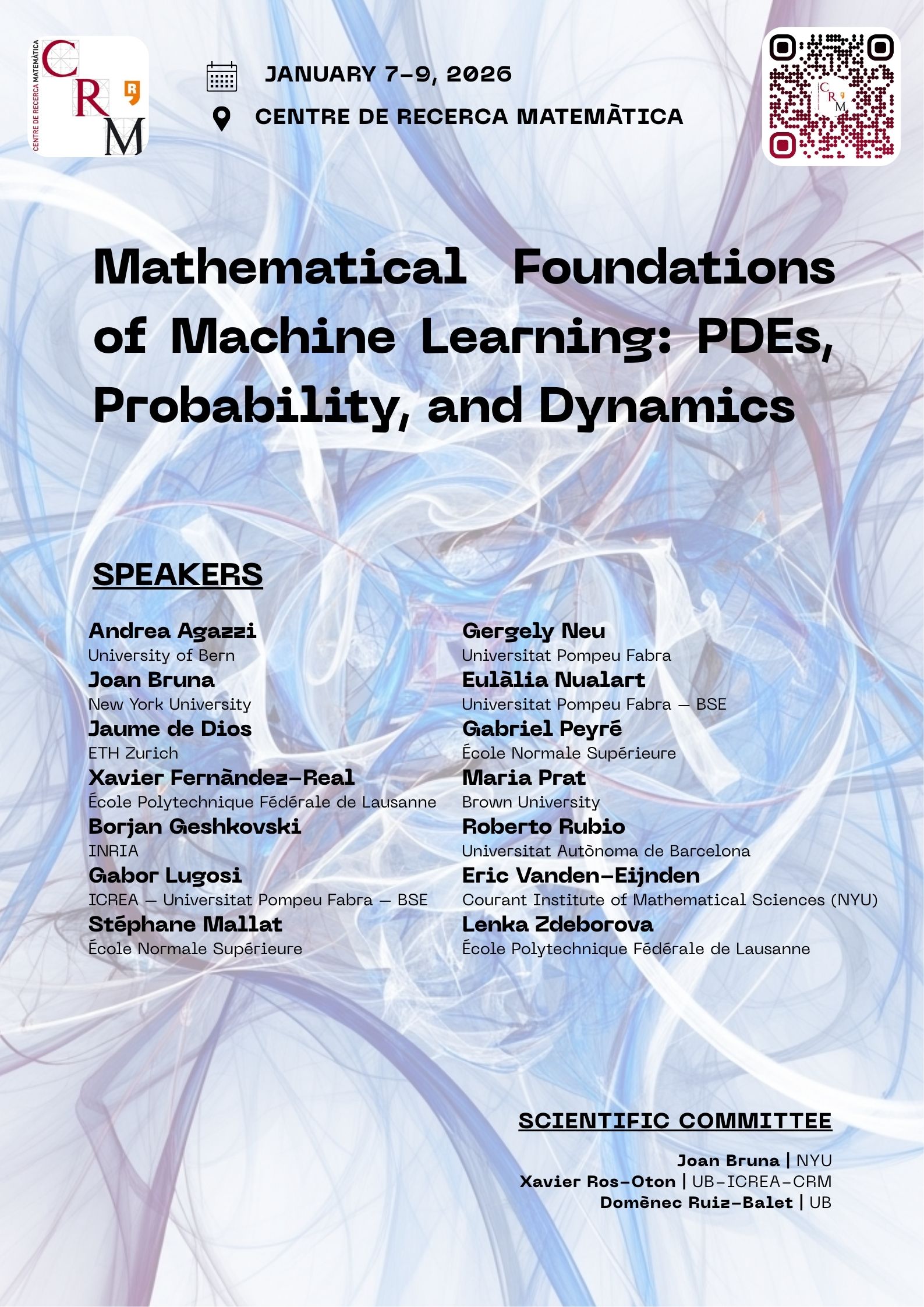

Mathematical Foundations of Machine Learning: PDEs, Probability, and Dynamics

January 07, 2026 to January 09, 2026

Venue: Centre de Recerca Matemàtica

Room: Auditorium

Registration for this activity is free of charge; however, participation must be accepted by the organizers.

To proceed, participants are required to sign in and complete the registration process in advance.

The official notification regarding the acceptance of participation will be sent by 15th December.

DESCRIPTION

The aim of the workshop is not only to bring together a small group of researchers working at the intersection of these fields, but also to introduce a broader audience to the interactions between modern machine learning and tools from partial differential equations, dynamical systems, and probability.

Scientific Committee

Joan Bruna | New York University

Xavier Ros-Oton | Universitat de Barcelona-ICREA-Centre de Recerca Matemàtica

Domènec Ruiz-Balet | Universitat de Barcelona

Speakers

Andrea Agazzi | University of Bern

Joan Bruna | New York University

Jaume de Dios | ETH Zurich

Xavier Fernàndez-Real | École Polytechnique Fédérale de Lausanne

Borjan Geshkovski | INRIA

Gabor Lugosi | ICREA – Universitat Pompeu Fabra – Barcelona School of Economics

Stéphane Mallat | École Normale Supérieure

Gergely Neu | Universitat Pompeu Fabra

Eulàlia Nualart | Universitat Pompeu Fabra – Barcelona School of Economics

Gabriel Peyré | École Normale Supérieure

Maria Prat | Brown University

Roberto Rubio | Universitat Autònoma de Barcelona

Eric Vanden-Eijnden | Courant Institute of Mathematical Sciences – New York University (NYU)

Lenka Zdeborova | École Polytechnique Fédérale de Lausanne

SCHEDULE

| Time | Wednesday January 7th | Thursday January 8th | Friday January 9th |
| --- | --- | --- | --- |
| 09:00 - 09:30 | Registration | | |
| 09:30 - 10:30 | Introduction. Joan Bruna, New York University | Generalization in Attention-Based Models: Insights from Solvable High-Dimensional Theory. Lenka Zdeborova, École Polytechnique Fédérale de Lausanne | Transformers as interacting particle systems: small time steps, low temperature and long time asymptotics. Borjan Geshkovski, INRIA |
| 10:30 - 11:00 | Coffee Break | Coffee Break + Group Picture | Coffee Break |
| 11:00 - 12:00 | Introduction. Joan Bruna, New York University | Moment Guided Diffusion for Maximum Entropy Generation. Stéphane Mallat, École Normale Supérieure | Eric Vanden-Eijnden, Courant Institute of Mathematical Sciences – New York University (NYU) |
| 12:00 - 12:45 | A PDE approach on Kernel Mean Discrepancy Flows. Xavier Fernàndez-Real, École Polytechnique Fédérale de Lausanne | Machine learning from a pure mathematician’s viewpoint. Roberto Rubio, Universitat Autònoma de Barcelona | Optimal transport distances for Markov chains. Gergely Neu, Universitat Pompeu Fabra |
| 13:00 - 14:30 | Lunch | | |

| Time | Wednesday January 7th | Time | Thursday January 8th | Time | Friday January 9th |
| --- | --- | --- | --- | --- | --- |
| 14:30 - 15:15 | Diffusion Flows and Optimal Transport in Machine Learning. Gabriel Peyré, École Normale Supérieure | 14:30 - 15:00 | Jaume de Dios, ETH Zurich | 14:30 - 15:15 | Convergence of continuous-time stochastic gradient descent with applications to deep neural networks. Eulàlia Nualart, Universitat Pompeu Fabra – Barcelona School of Economics |
| 15:30 - 16:00 | Break | 15:00 - 15:30 | ML-Based Approaches for Computer-Assisted Proofs in PDE. Maria Prat, Brown University | 15:15 - 16:00 | Gabor Lugosi, ICREA – Universitat Pompeu Fabra – Barcelona School of Economics |
| 16:00 - 16:45 | Andrea Agazzi, University of Bern | 15:30 - 16:00 | Break | 16:00 - 16:15 | Closing remarks |
| 16:00 - 17:00 | | 16:00 - 16:30 | Debate: Mathematics and Machine Learning | | |
| | | 16:30 - 17:00 | Debate | | |

REGISTRATION

Before registering for the activity, you will be asked to create a CRM web user account through the following link. Please note that you must fill in both the personal and the academic information requested in the web user intranet.

CRM USER CREATION

After creating your CRM user account, you can complete your registration either by logging in on the activity webpage or by clicking the button below and then selecting ‘Sign in’.

REGISTER

LODGING INFORMATION

ON-CAMPUS AND BELLATERRA

BARCELONA AND OFF-CAMPUS

For inquiries about this event please contact the Scientific Events Coordinator Ms. Núria Hernández at nhernandez@crm.cat

CRM Events code of conduct

All activities organized by the CRM are required to comply with the following Code of Conduct.

CRM Code of Conduct

SCAM WARNING

We are aware of a number of current scams targeting participants at CRM activities concerning registration or accommodation bookings. If you are approached by a third party (e.g. travellerpoint.org, Conference Committee, Global Travel Experts or Royal Visit) asking for booking or payment details, please ignore them.

Please remember:

i) CRM never uses third parties to handle administration for its events: messages will come directly from CRM staff.

ii) CRM will never ask participants for credit card or bank details.

iii) If you have any doubt about an email you receive, please get in touch.

Machine learning from a pure mathematician’s viewpoint

This talk is a personal account of how an outsider mathematician may want to think about some key concepts and ideas in machine learning.

A PDE approach on Kernel Mean Discrepancy Flows

We present recent results on the use of PDE techniques to study the well-posedness and quantitative convergence of kernel mean discrepancy flows with respect to a fixed measure in various related settings, including Coulomb interactions, energy distance, and ReLU neural networks.

This talk is based on a forthcoming joint work with L. Chizat, M. Colombo, and R. Colombo.

Diffusion Flows and Optimal Transport in Machine Learning

In this talk, I will review how concepts from optimal transport can be applied to analyze seemingly unrelated machine learning methods for sampling and training neural networks. The focus is on using optimal transport to study dynamical flows in the space of probability distributions. The first example will be sampling by flow matching, which regresses advection fields. In its simplest case (diffusion models), this approach exhibits a gradient structure similar to the displacement seen in optimal transport. I will then discuss Wasserstein gradient flows, where the flow minimizes a functional within the optimal transport geometry. This framework can be employed to model and understand the training dynamics of the probability distribution of neurons in two-layer networks. The final example will explore modeling the evolution of the probability distribution of tokens in deep transformers. This requires modifying the optimal transport structure to accommodate the softmax normalization inherent in attention mechanisms.

Convergence of continuous-time stochastic gradient descent with applications to deep neural networks

We study a continuous-time approximation of the stochastic gradient descent process for minimizing the population expected loss in learning problems. The main results establish general sufficient conditions for convergence, extending the results of Chatterjee (2022) established for (nonstochastic) gradient descent. We show how the main result can be applied to the case of overparametrized neural network training. Joint work with Gabor Lugosi (UPF).